Machine Learning for dApps?

This post is adapted from a talk I gave at the World Summit AI, 2019. Slides are embedded at the bottom of the post. I'll include the video if/when it becomes available.

First, let's align on what a "dApp" is. At the risk of oversimplifying, most applications can be thought of as having three layers:

- an identity layer, generally your email, social media account or phone number

- a storage layer that captures all your data and metadata. This tends to be hosted by the service provider and is often opaque to the user. Little-known fact: even when services let you "export" your data, it's almost impossible for them to track down every place where your data (and derivations thereof) might be scattered across their systems and services.

- finally, you have the actual business logic and presentation layer (UX).

The key point here is that the overall environment is trusted: we trust companies with our identity; we trust that they will keep our data safe; we trust that distribution channels treat everyone in a fair and consistent manner, and so on. Of course, that's not always the case.

The core premise of dApps – decentralized applications – is to remove (or at least relax) this assumption of trust wherever possible. For instance, in the context of Blockstack:

- Identity is managed using a blockchain, owned by the user and free from control by any other entity.

- Storage is federated: users choose where their data goes, and open protocols govern how data is encrypted at rest. Each application gets its own secure vault, with mechanisms for secure data sharing between applications.

- Rich ACLs govern data access: applications and services must explicitly get user permission, and users can revoke it at any time.

In this model, users needn't trust any service providers or app developers (at least as far as identity and data are concerned).

And this isn't some fringe movement. In the past year alone, developers from all over the world have created over 270 dApps on Blockstack; there are dozens of projects out there trying to bootstrap their own ecosystems.

So, what does all this have to do with machine learning?

Well, most machine learning today is centralized. ML (especially deep learning) loves big data, which is often user-generated and thus locked inside platforms with large user bases. Only a handful of companies have the quantity of data, the depth of ML expertise and the sheer computational resources to enable meaningful ML innovation.

On the other hand, most dApps today are created by indie hackers or small teams with limited data, expertise and resources. Furthermore, when users control their own data in a privacy-conscious ecosystem, it becomes much harder to indiscriminately collect large datasets.

As it happens, blockchains might offer some potential solutions here, and that's what I want to focus on in this post.

Blockchains come in all shapes and sizes. Rather than try to use some canonical definition, let’s focus on 3 key properties most blockchains provide:

- blockchains provide a mostly immutable log, which makes them great for transparency since you get a verifiable audit trail.

- blockchains let multiple entities coordinate in a trust-less, permission-less environment. This is great for marketplaces as more entities can now participate and expect a fair outcome.

- with tokens and smart contracts – programmable money – blockchains are great at codifying incentives.
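To make the first property a bit more concrete, here is a minimal sketch of a hash-chained log in Python. It's a toy, not how any particular blockchain is implemented: `HashChainLog` and its methods are names I made up for illustration, and there is no consensus or networking. It only shows why editing an earlier entry is detectable.

```python
import hashlib
import json


class HashChainLog:
    """Toy append-only log: each entry commits to the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash, payload):
        # Sorted keys so the same payload always serializes (and hashes) identically.
        body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, payload):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "prev": prev_hash,
            "payload": payload,
            "hash": self._digest(prev_hash, payload),
        }
        self.entries.append(entry)
        return entry["hash"]

    def verify(self):
        # Recompute every hash from the start; any edited entry breaks the chain.
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["payload"]):
                return False
            prev_hash = entry["hash"]
        return True


log = HashChainLog()
log.append({"event": "listing_created", "id": 1})
log.append({"event": "data_purchased", "id": 1})
intact = log.verify()

# Quietly rewrite history, then audit again.
log.entries[0]["payload"]["id"] = 2
tampered_ok = log.verify()
```

The audit passes before the edit and fails after it. That is the "verifiable audit trail" in miniature; a real chain adds replication and consensus so that nobody can simply recompute all the hashes after tampering.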

With those properties in mind, let's see how blockchains might help democratize ML. Let's start with an easy scenario first.

Say you’re building a photo gallery dApp and want to let users search their photos. Thankfully there are readily available datasets, many pre-trained models and dozens of commercial APIs you can use. Easy, right?

Setting aside cost, I personally have been bitten by third-party APIs silently changing their behavior. And this lack of transparency extends to the datasets themselves.

Researchers at the AI Now Institute published a fascinating report that looks into public image datasets.

At the image layer of the training set, like everywhere else, we find assumptions, politics, and worldviews. According to ImageNet, for example, Sigourney Weaver is a “hermaphrodite,” a young man wearing a straw hat is a “tosser,” and a young woman lying on a beach towel is a “kleptomaniac.” But the worldview of ImageNet isn’t limited to the bizarre or derogatory conjoining of pictures and labels.

There are countless other examples that range from funny and bizarre to dark and outright disturbing.

So one potential solution here is to develop models in the open, with full transparency. Microsoft Research recently launched a prototype system, Decentralized & Collaborative AI, that runs on top of the Ethereum blockchain and does just that.

The basic idea is that users are incentivized to add data that improves the model. All data and metadata are stored on the blockchain, so they are available for use in other models too. Whenever someone wants to add new data, it goes through three steps. First, an incentive mechanism checks whether the new data actually improves the model; the network penalizes bad actors to reduce bad or noisy data. Next, the data handler persists the data and metadata on chain. Finally, the model is updated.
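Here is a rough Python sketch of those three steps. To be clear, this is not the Microsoft Research code. The `Perceptron` model, the `add_data` function, the fixed deposit and the rule "a sample must not lower accuracy on a small validation set" are all simplifying assumptions of mine, and a plain list stands in for the chain.

```python
import copy


class Perceptron:
    """Tiny online linear classifier standing in for the shared model."""

    def __init__(self, n_features):
        self.w = [0.0] * n_features
        self.b = 0.0

    def predict(self, x):
        score = sum(wi * xi for wi, xi in zip(self.w, x)) + self.b
        return 1 if score > 0 else 0

    def update(self, x, y):
        # Perceptron rule: weights only move when the prediction is wrong.
        error = y - self.predict(x)
        self.w = [wi + error * xi for wi, xi in zip(self.w, x)]
        self.b += error


def accuracy(model, samples):
    return sum(model.predict(x) == y for x, y in samples) / len(samples)


def add_data(model, ledger, balances, contributor, x, y, validation, deposit=1.0):
    # Step 1: incentive mechanism (assumed rule: must not hurt validation accuracy).
    candidate = copy.deepcopy(model)
    candidate.update(x, y)
    if accuracy(candidate, validation) < accuracy(model, validation):
        balances[contributor] -= deposit  # penalize the contributor
        return False
    # Step 2: data handler persists data and metadata (a list stands in for the chain).
    ledger.append({"contributor": contributor, "x": x, "y": y})
    # Step 3: update the shared model.
    model.update(x, y)
    return True


validation = [([1.0, 1.0], 1), ([-1.0, -1.0], 0)]
model, ledger = Perceptron(2), []
balances = {"alice": 5.0, "mallory": 5.0}

accepted = add_data(model, ledger, balances, "alice", [2.0, 2.0], 1, validation)
rejected = add_data(model, ledger, balances, "mallory", [2.0, 2.0], 0, validation)
```

In this run the first sample is accepted and stored, while the second (the same point with a flipped label) would undo the model's progress, so it is rejected and the contributor loses the deposit.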

Now, obviously this is not without limitations. Many datasets and models are just too large to be effectively stored on a blockchain. Further, this assumes you have some model and labeled data as a starting point. What if you don’t?

Let's say you want to build a personal wealth manager dApp that offers ML-driven insights into your spending and investments. You have a cold-start problem: there is no existing data to train models on. How can we train models on private user data, or incentivize fair, transparent, responsible data collection? Even if you did have some raw data, how would you label it? Relying on third-party contractors (à la Amazon Mechanical Turk) for such labeling can lead to transparency and fairness issues.

Luckily for us, blockchains are great at codifying incentives via tokens and smart contracts.

One way out of the cold-start problem is to incentivize the collection of data via a marketplace. There are many projects trying to tackle this problem; a relatively new one is called Computable.

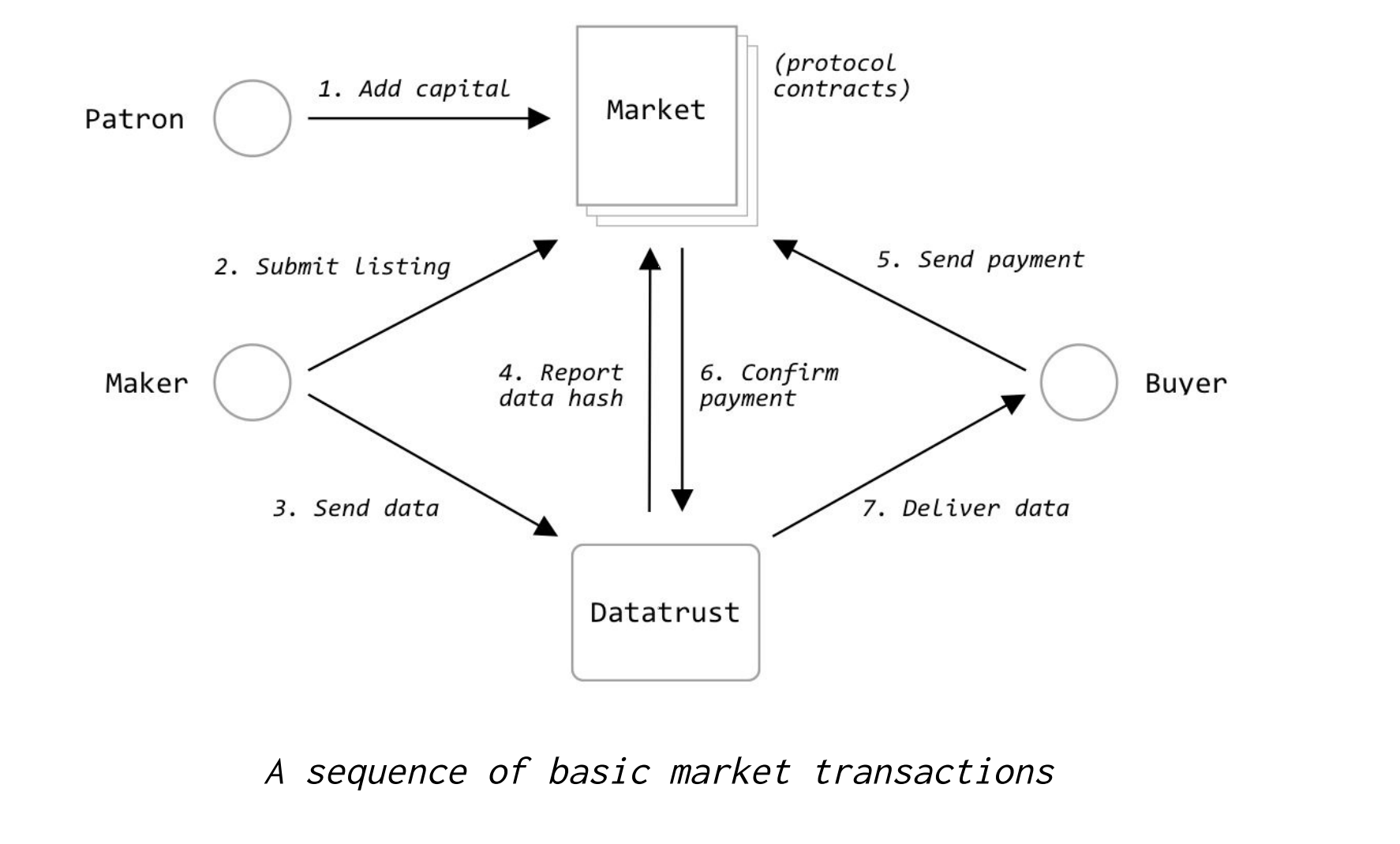

In this model, different entities and their interactions are governed by a series of smart contracts. Patrons can bootstrap the process by adding some capital into the market. They get a share of all data access transactions.

On the supply side, makers submit a listing to the market, and the actual data to a datatrust, an off-chain entity that handles data storage. The datatrust verifies the data and sends a hash of it to the market, confirming receipt and linking the on-chain listing to the off-chain store. Makers earn listing rewards and a portion of the capital provided by patrons.

On the demand side, buyers send a payment to the market, which confirms the payment with the datatrust, which then delivers the data to the buyer. The listing owner receives an appropriate share of the payout.

All this is mediated through smart contracts, and all incentives are aligned around how useful the data is.
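To see how the pieces fit together, here is a toy Python simulation of that flow. It is a sketch under my own assumptions, not Computable's contracts or API: the `Market` and `Datatrust` classes, the 70/30 split between the listing owner and patrons, and the pro-rata patron payout are all hypothetical, and listing rewards are left out entirely.

```python
import hashlib


class Market:
    """Stands in for the on-chain contracts: listings, payments, payouts."""

    def __init__(self, maker_share_pct=70):
        self.maker_share_pct = maker_share_pct  # assumed split, purely illustrative
        self.patron_stakes = {}  # patron -> capital supplied
        self.listings = {}       # data hash -> maker who owns the listing
        self.paid = set()        # (buyer, data hash) pairs with a recorded payment
        self.balances = {}       # account -> tokens earned

    def add_patron(self, patron, capital):
        self.patron_stakes[patron] = self.patron_stakes.get(patron, 0) + capital

    def confirm_listing(self, maker, data_hash):
        self.listings[data_hash] = maker

    def pay(self, buyer, data_hash, price):
        maker = self.listings[data_hash]  # KeyError if the data was never listed
        maker_cut = price * self.maker_share_pct // 100
        self._credit(maker, maker_cut)
        # The rest goes to patrons in proportion to their stake
        # (integer division; any rounding remainder is ignored in this sketch).
        pool = price - maker_cut
        total_stake = sum(self.patron_stakes.values())
        for patron, stake in self.patron_stakes.items():
            self._credit(patron, pool * stake // total_stake)
        self.paid.add((buyer, data_hash))

    def _credit(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount


class Datatrust:
    """Stands in for the off-chain store that holds the actual bytes."""

    def __init__(self, market):
        self.market = market
        self._blobs = {}

    def submit(self, maker, data):
        data_hash = hashlib.sha256(data).hexdigest()
        self._blobs[data_hash] = data
        # Reporting the hash links the on-chain listing to the off-chain data.
        self.market.confirm_listing(maker, data_hash)
        return data_hash

    def deliver(self, buyer, data_hash):
        if (buyer, data_hash) not in self.market.paid:
            raise PermissionError("no payment recorded for this buyer")
        return self._blobs[data_hash]


market = Market()
trust = Datatrust(market)
market.add_patron("pat", 200)
market.add_patron("quinn", 100)

data_hash = trust.submit("maker", b"date,amount\n2019-10-01,42.00\n")
market.pay("buyer", data_hash, price=100)
delivered = trust.deliver("buyer", data_hash)
```

The buyer can re-hash what was delivered and compare it against the hash recorded in the market, which is the point of keeping only the hash on chain while the bytes live elsewhere.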

Alright, so this is potentially interesting for incentivizing data collection. Can we extend this marketplace idea to algorithms and services too?

One of the bigger projects in this space is called the Ocean Protocol.

Ocean is an ecosystem for the data economy and associated services, with a tokenized service layer that securely exposes data, storage, compute and algorithms for consumption.

Ocean aims to facilitate, using smart contracts, a rich ecosystem of both data and services. Ocean also allows bringing compute to the data: providers deliver a packaged training algorithm that consumers can run on-premises to avoid data leakage.
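The "bring compute to the data" idea can be illustrated with a few lines of Python. This is only a conceptual sketch, not Ocean's API: `DataOwner`, `run` and `fit_mean_spend` are hypothetical names, and the output check (only a flat dictionary of numbers may leave) is a crude stand-in for the safeguards a real system would need.

```python
class DataOwner:
    """Holds private records and only ever releases vetted results."""

    def __init__(self, records):
        self._records = records  # raw data stays on the owner's side

    def run(self, algorithm):
        # The packaged algorithm executes next to the data.
        result = algorithm(self._records)
        # Crude leak guard (an assumption of this sketch): allow only a flat
        # dict of numbers out, never the records themselves.
        if not isinstance(result, dict) or not all(
            isinstance(value, (int, float)) for value in result.values()
        ):
            raise ValueError("algorithm tried to return something other than numeric summaries")
        return result


def fit_mean_spend(records):
    """Stand-in 'training algorithm': fits a one-parameter model (the mean)."""
    amounts = [record["amount"] for record in records]
    return {"n": len(amounts), "mean": sum(amounts) / len(amounts)}


def leaky_algorithm(records):
    # Tries to walk off with the raw rows.
    return {"rows": records}


owner = DataOwner([
    {"user": "u1", "amount": 10.0},
    {"user": "u2", "amount": 20.0},
    {"user": "u3", "amount": 30.0},
])

summary = owner.run(fit_mean_spend)

try:
    owner.run(leaky_algorithm)
    leak_blocked = False
except ValueError:
    leak_blocked = True
```

The algorithm travels to the data and only the fitted parameters travel back. A type check like this is nowhere near sufficient in practice, but it shows where the trust boundary sits.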

You can actually check out this hosted Jupyter instance (https://datascience.oceanprotocol.com/) to see how all this comes together. There’s also a “data commons” marketplace to see Ocean in practice. And if you’d like to participate in Ocean, now is a great time because they recently announced a “Data Economy Challenge”! Ocean has also lined up an impressive array of corporate and government partners, especially in Asia.

But let’s face it, these systems are still early. There is still a lot of friction and inertia to overcome. The onus is on the ML community to push for leveling the playing field and demanding more transparency. Next time you find yourself in need of Mechanical Turk, ask yourself if you can use one of these marketplaces instead.

Strange Loops

And thus we come full circle. I introduced the notion of dApps (decentralized applications) that leverage blockchain and other technologies to put users in control of their identity and data. We looked at some challenges imposed by this constrained environment, where most data and compute are localized at the edge. And we saw how blockchains can potentially help alleviate some of those pain points by facilitating fair, transparent, trust-less marketplaces of data, algorithms and services.